Scientific Computing Resources: Overview

Clusters and high-end computing servers based on Intel (Xeon, Itanium) platforms are available for scientific computing. These servers and clusters are accessible from users' desktops over RRCATNet on a 24x7 basis. Virtual Network Computing (VNC), a simple protocol for remote access to graphical user interfaces, is implemented on the computing clusters and servers so that graphical output can be displayed on users' desktops.
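VNC sessions are usually addressed as `host:display`. By the common convention, the VNC (RFB) server for display number n listens on TCP port 5900 + n. The sketch below assumes that standard convention; the host name is hypothetical and not a real RRCAT server.

```python
def vnc_port(display: int, base_port: int = 5900) -> int:
    """Return the TCP port conventionally used by VNC display :<display>."""
    if display < 0:
        raise ValueError("display number must be non-negative")
    return base_port + display


def parse_vnc_target(target: str) -> tuple[str, int]:
    """Split a 'host:display' string (e.g. 'server:1') into (host, port)."""
    host, _, display = target.rpartition(":")
    if not host:
        raise ValueError(f"expected 'host:display', got {target!r}")
    return host, vnc_port(int(display))


# 'hpc-server.example' is a hypothetical host name used only for illustration.
print(parse_vnc_target("hpc-server.example:1"))  # ('hpc-server.example', 5901)
```

A session on display :1 would therefore usually be reached on port 5901, which is also the port one would forward if the connection is tunnelled over SSH.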

More than ten high-end computing servers are available for scientific computing. Centralized storage, configured on redundant servers for improved availability, is available to users. User authentication and authorization are handled through OpenLDAP (Lightweight Directory Access Protocol). Each user's home directory is available via Network File System (NFS) on all computing servers.
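Authenticating a user against an LDAP directory typically begins with a search for that user's entry. The sketch below builds such a search filter and escapes the special characters defined in RFC 4515, so that a crafted user name cannot change the meaning of the filter. The `posixAccount` object class and `uid` attribute are common conventions assumed here, not details confirmed for the RRCAT directory.

```python
# Characters that must be escaped in an LDAP search filter value (RFC 4515).
_FILTER_ESCAPES = {
    "\\": r"\5c",
    "*": r"\2a",
    "(": r"\28",
    ")": r"\29",
    "\x00": r"\00",
}


def escape_filter_value(value: str) -> str:
    """Escape special characters so user input cannot alter the filter."""
    return "".join(_FILTER_ESCAPES.get(ch, ch) for ch in value)


def user_filter(username: str) -> str:
    """Build a filter matching a POSIX account by uid (assumed schema)."""
    return f"(&(objectClass=posixAccount)(uid={escape_filter_value(username)}))"


print(user_filter("alice"))      # (&(objectClass=posixAccount)(uid=alice))
print(user_filter("a*)(uid=*"))  # (&(objectClass=posixAccount)(uid=a\2a\29\28uid=\2a))
```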

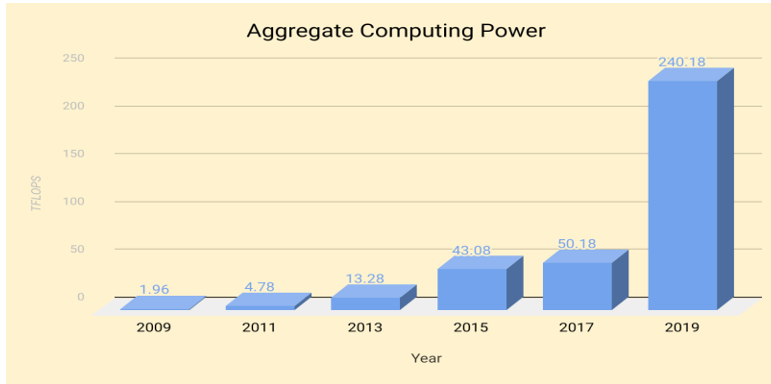

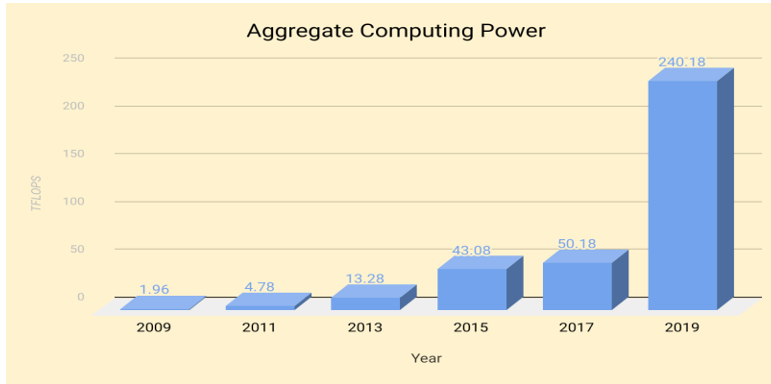

Four clusters with a combined sustained computing power of 240.18 TF (teraflops) are available to scientific computing users for running their parallel jobs. TORQUE is configured as the cluster resource manager and Maui as the job scheduler. TORQUE provides control over batch jobs and distributed computing resources; it is an advanced open-source product based on the original PBS (Portable Batch System) project. Recent compilers and libraries are available to users for their computational work. User authentication and authorization are handled through OpenLDAP (Lightweight Directory Access Protocol). Each user's home directory is available via the Lustre parallel file system (a file system with high I/O capability for clusters) on all clusters.
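Jobs are handed to TORQUE as shell scripts whose `#PBS` comment lines carry the resource request; Maui then decides when each job runs. The sketch below renders such a script from Python. The queue name, node and core counts, and the `mpirun` command line are hypothetical placeholders; the queues and MPI launch conventions actually configured on the RRCAT clusters may differ.

```python
def pbs_script(job_name: str, nodes: int, ppn: int, walltime: str,
               queue: str, command: str) -> str:
    """Render a TORQUE/PBS batch script requesting nodes x ppn cores."""
    lines = [
        "#!/bin/bash",
        f"#PBS -N {job_name}",               # job name shown by qstat
        f"#PBS -q {queue}",                  # destination queue (site-specific)
        f"#PBS -l nodes={nodes}:ppn={ppn}",  # nodes and processors per node
        f"#PBS -l walltime={walltime}",      # wall-clock limit, HH:MM:SS
        "#PBS -j oe",                        # merge stdout and stderr into one file
        "cd $PBS_O_WORKDIR",                 # start in the directory qsub was run from
        command,
    ]
    return "\n".join(lines) + "\n"


# Hypothetical job: 2 nodes x 32 cores on an assumed queue named "batch".
script = pbs_script("md_run", 2, 32, "12:00:00", "batch",
                    "mpirun -np 64 ./my_solver input.dat")
print(script)
```

The generated text would be saved to a file and submitted with `qsub`; its progress can then be followed with `qstat`.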

High Performance Computing Cluster, Kshitij-5 (क्षितिज-5)

High Performance Computing Cluster (HPCC) Kshitij-5 (क्षितिज-5) is the latest addition to the centralized scientific computing facilities available at RRCAT for scientific and engineering applications. Kshitij-5 combines Intel Xeon Skylake processors, NVIDIA Tesla P100 GPU accelerators and an InfiniBand EDR 100 Gbps interconnect.

Kshitij-5 is equipped with 64 compute nodes housed in 16 enclosures, 8 rack-mounted GPU nodes, 4 management servers, 4 file servers and a 100 Gbps Mellanox InfiniBand (IB) switch as the interconnect. In total it provides 2048 CPU cores (two 16-core Intel Xeon Gold processors @ 2.60 GHz per compute node), 24 TB of aggregate memory, 16 GPUs (57,344 GPU cores in total) and a SAN system with 300 TB of raw storage capacity.
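The headline figures follow from the per-node configuration given above, as the short calculation below shows. It assumes that all 16 GPUs sit in the 8 GPU nodes; the per-GPU figure of 3,584 cores is derived by division and matches the CUDA core count of a Tesla P100.

```python
# Kshitij-5 figures as stated in the text above.
compute_nodes = 64
enclosures = 16
sockets_per_node = 2
cores_per_socket = 16
gpu_nodes = 8
gpus = 16
total_gpu_cores = 57_344

total_cpu_cores = compute_nodes * sockets_per_node * cores_per_socket
nodes_per_enclosure = compute_nodes // enclosures
gpus_per_gpu_node = gpus // gpu_nodes        # assumes all GPUs sit in the GPU nodes
gpu_cores_per_gpu = total_gpu_cores // gpus

print(total_cpu_cores, nodes_per_enclosure, gpus_per_gpu_node, gpu_cores_per_gpu)
# 2048 4 2 3584
```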

Kshitij-5 is a blend of open-source software components and commercially available compilers and libraries. Software components of this HPCC such as the resource manager, parallel file system and user authentication service are configured in fail-over mode.

The Lustre parallel file system has been configured to deliver high throughput, high availability and redundancy for enhanced I/O operations. This has been achieved with two Metadata Servers and four Object Storage Servers spread across four distinct nodes connected over the 100 Gbps InfiniBand interconnect. Open-source inter-process communication (MPI) libraries, namely OpenMPI (openmpi-4.0), MVAPICH2 (mvapich2-2.3) and MPICH (mpich-3.3), are configured to support various types of parallel computations. The Intel compiler suite “Parallel Studio XE 2019” has been installed; it includes Fortran and C compilers, the Math Kernel Library, Intel MPI and other tools for enhanced computations.

The sustained computing power delivered by the Kshitij-5 HPCC is 190 teraflops, as measured with the open-source LINPACK benchmark. With the release of Kshitij-5, the aggregate centralized computing power of the HPCCs available to scientists and engineers has reached 240.18 teraflops.
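Two quick calculations put these numbers in context. The first subtracts the sustained figures quoted in this section for Kshitij-5 (190 TF) and Kshitij-4 (29.88 TF) from the 240.18 TF aggregate, giving the combined share of the other two clusters. The second shows how a theoretical peak (Rpeak) and a LINPACK efficiency (Rmax / Rpeak) are computed. The figure of 32 double-precision FLOPs per cycle per core (two AVX-512 FMA units) is an assumption about the processors, not a figure from the text, and the CPU-only peak ignores the GPUs, so it is indicative only.

```python
# Sustained LINPACK figures quoted in this section, in TFLOPS.
aggregate_tf = 240.18
kshitij5_tf = 190.0
kshitij4_tf = 29.88

# Whatever remains is the combined share of the other two clusters.
other_clusters_tf = aggregate_tf - kshitij5_tf - kshitij4_tf
print(f"{other_clusters_tf:.2f}")  # 20.30


def peak_tflops(cores: int, clock_ghz: float, flops_per_cycle: int) -> float:
    """Theoretical peak (Rpeak) of a group of CPU cores, in TFLOPS."""
    return cores * clock_ghz * flops_per_cycle / 1000.0


def hpl_efficiency(rmax_tf: float, rpeak_tf: float) -> float:
    """Fraction of theoretical peak achieved in a LINPACK run (Rmax / Rpeak)."""
    return rmax_tf / rpeak_tf


# ASSUMPTION: 32 FP64 FLOPs per cycle per core; not stated in the text.
cpu_rpeak = peak_tflops(2048, 2.6, 32)
print(f"{cpu_rpeak:.1f}")  # 170.4
```

A meaningful efficiency for the 190 TF result would need the GPU partition's peak added to `cpu_rpeak` before calling `hpl_efficiency`.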

High Performance Computing Cluster, Kshitij-5 (क्षितिज-5)

Aggregate Computing Power at RRCAT

High Performance Computing Cluster, Kshitij-4 (क्षितिज-4)

High Performance Computing Cluster (HPCC) Kshitij-4 (क्षितिज-4) has been commissioned at RRCAT for scientific and engineering applications. This HPCC is equipped with 64 HP blade servers housed in four blade enclosures, two HP rack-mounted servers and 56 Gbps Mellanox InfiniBand (IB) switches. In total, Kshitij-4 provides 1536 computing cores, 12 TB of aggregate memory and a SAN system with 84 TB of user data storage for advanced computations.

This HPCC has been configured with open-source software to function in fail-safe mode. OpenLDAP has been configured in dual-master mode, and the resource manager (TORQUE) and scheduler (Maui) are implemented in high-availability mode. If a problem occurs on the master node, the cluster continues to work uninterrupted using the redundant master node.
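Active/standby arrangements like the one described above are commonly driven by heartbeats. The sketch below is a generic election rule under that assumption, not the actual mechanism used by TORQUE, Maui or OpenLDAP; the node names and the 30-second timeout are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MasterNode:
    name: str
    last_heartbeat: float  # time of the last heartbeat received, in seconds


def active_master(primary: MasterNode, standby: MasterNode,
                  now: float, timeout: float = 30.0) -> str:
    """Return the node that should serve requests at time `now`.

    The primary keeps the role while its heartbeat is fresh; once it has been
    silent for longer than `timeout`, the standby takes over, provided the
    standby itself is still alive.
    """
    if now - primary.last_heartbeat <= timeout:
        return primary.name
    if now - standby.last_heartbeat <= timeout:
        return standby.name
    raise RuntimeError("no healthy master node available")


primary = MasterNode("master1", last_heartbeat=100.0)
standby = MasterNode("master2", last_heartbeat=115.0)
print(active_master(primary, standby, now=120.0))  # master1
print(active_master(primary, standby, now=140.0))  # master2
```

A production setup must also ensure that the two nodes never act as master at the same time (the "split brain" problem), which this sketch does not address.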

High I/O capability is one of the main features of this HPCC and is implemented through the Lustre parallel file system. Lustre is configured as the file system of the Kshitij-4 cluster over the FDR InfiniBand network. A Metadata Server (MDS), four Object Storage Targets (OSTs), Lustre Networking (LNet) and a Lustre management server have been installed and configured to implement this file system.
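Much of Lustre's throughput comes from striping. A file is split into fixed-size chunks that are placed round-robin across the objects of its layout, one object per OST, so several OSTs can serve a single file in parallel. The function below shows this RAID-0-style mapping for one file. The 1 MiB stripe size and the stripe count of four (matching the four OSTs above) are assumptions; a real file's layout can be inspected with `lfs getstripe`.

```python
MIB = 1024 * 1024


def locate(offset: int, stripe_size: int = 1 * MIB,
           stripe_count: int = 4) -> tuple[int, int]:
    """Map a byte offset in a striped file to (stripe index, offset in object).

    Chunks of `stripe_size` bytes go to the file's OST objects round-robin,
    so each object holds every stripe_count-th chunk back to back.
    """
    chunk = offset // stripe_size             # which stripe-sized chunk
    stripe_index = chunk % stripe_count       # which of the file's OST objects
    object_offset = (chunk // stripe_count) * stripe_size + offset % stripe_size
    return stripe_index, object_offset


for off in (0, 1 * MIB, 3 * MIB + 10, 4 * MIB):
    print(off, locate(off))
# 0 (0, 0)
# 1048576 (1, 0)
# 3145738 (3, 10)
# 4194304 (0, 1048576)
```

The fifth chunk (offset 4 MiB) wraps back to the first object, which is why sequential I/O on a large file keeps all four OSTs busy.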

The inter-process communication (MPI) libraries MPICH (mpich-3.1.3), MVAPICH2 (mvapich2-2.0.1) and OpenMPI (openmpi-1.8.4) are configured to support various types of parallel applications. Intel Fortran and C compilers (version 14) and the Math Kernel Library are configured on this cluster for parallel computations.

This cluster delivers a sustained computing power of 29.88 teraflops.

High Performance Computing Cluster, Kshitij-4 (क्षितिज-4)

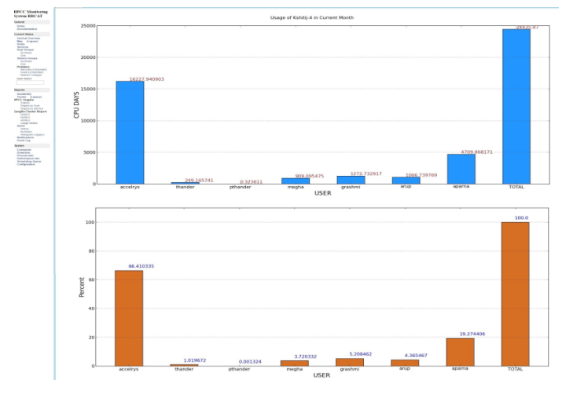

Centralized Monitoring System for High Performance Computing Clusters:

A centralized monitoring system has been developed and deployed on RRCATNet to monitor the High Performance Computing Clusters at RRCAT. It reports resource usage and the status of services, including current load, current users, disk usage, Portable Batch System (PBS) status and swap usage of each cluster.

The system displays each user's usage of the HPCCs in graphical form. The open-source, Python-based Django web application framework is used to compute and plot this per-user usage dynamically. This helps administrators take appropriate action, such as proper assignment of the various queues, to ensure optimum use of the high-end resources.
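Per-user usage figures of this kind are usually derived from the batch system's accounting records. The sketch below assumes TORQUE-style accounting lines (`date time;record type;job id;key=value ...`, where record type `E` marks a finished job) and sums wall-clock hours per user. The sample records are invented for illustration, and this is not the code of the RRCAT monitoring system.

```python
from collections import defaultdict


def walltime_hours(hms: str) -> float:
    """Convert an HH:MM:SS duration to hours."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h + m / 60 + s / 3600


def usage_by_user(records: list[str]) -> dict[str, float]:
    """Sum wall-clock hours of finished jobs ('E' records) per user."""
    totals: dict[str, float] = defaultdict(float)
    for line in records:
        fields = line.strip().split(";", 3)
        if len(fields) != 4 or fields[1] != "E":
            continue  # skip queued/started/deleted records and malformed lines
        attrs = dict(item.split("=", 1) for item in fields[3].split() if "=" in item)
        user = attrs.get("user")
        walltime = attrs.get("resources_used.walltime")
        if user and walltime:
            totals[user] += walltime_hours(walltime)
    return dict(totals)


# Invented sample records in TORQUE accounting style.
sample = [
    "04/01/2019 10:00:00;Q;101.server;queue=batch",
    "04/01/2019 12:30:00;E;101.server;user=alice queue=batch resources_used.walltime=02:30:00",
    "04/01/2019 13:00:00;E;102.server;user=bob queue=batch resources_used.walltime=01:00:00",
    "04/01/2019 14:00:00;E;103.server;user=alice queue=batch resources_used.walltime=00:30:00",
]
print(usage_by_user(sample))  # {'alice': 3.0, 'bob': 1.0}
```

Totals like these could then be fed to a plotting or web layer; multiplying by the number of cores a job requested would give core-hours instead of wall-clock hours.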

Usage of Kshitij-4 High Performance Computing Cluster

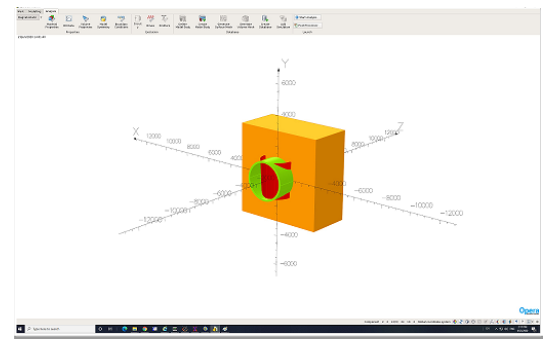

Virtual cluster based solution for OPERA Software

A virtual-cluster-based setup has been commissioned for OPERA, a well-known magnet design and simulation software. The setup consists of two HP DL380 G9 servers (dual-processor servers with octa-core Xeon 3.2 GHz processors and 128 GB RAM). One of the servers is configured as the license server, and both servers are configured as application servers.

GUI-based 2D and 3D applications of OPERA need high-performance, industry-standard OpenGL run-time libraries. Both servers are optimized for the OpenGL run-time libraries. Users can run their GUI-based applications remotely from their desktops on RRCATNet.

Remote execution of OPERA on application server from Windows desktop using Cygwin

MATLAB Software

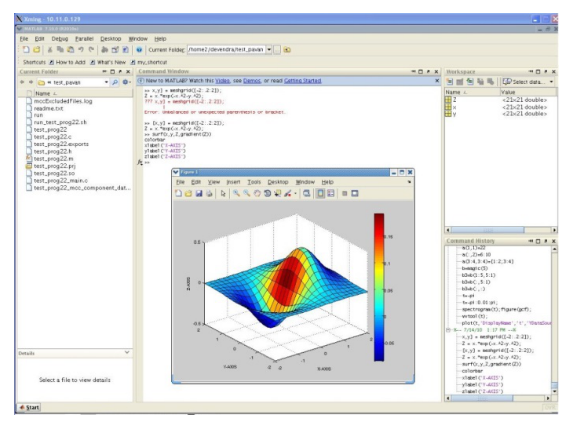

MATLAB provides an interactive technical computing environment with functions for algorithm development, data analysis and visualization. MATLAB version R2010 has been installed with two network floating licenses, and MATLAB version R2015 with three network floating licenses. This software is provided to users as a centralized facility.

The installed package includes many modules and toolboxes in addition to the core package and the MATLAB Compiler. Users can run their GUI-based applications remotely from their desktops on RRCATNet. The free, open-source software Xming is configured for running applications with OpenGL support from remote Windows desktops.
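A network floating license caps how many copies of an application can run at the same time across the network, instead of tying each copy to one machine. The toy model below illustrates that idea with a seat counter; the class is hypothetical and is not a model of MATLAB's actual license manager.

```python
import threading


class FloatingLicensePool:
    """Toy model of a network floating license with a fixed number of seats."""

    def __init__(self, seats: int) -> None:
        # BoundedSemaphore raises ValueError if more seats are returned than exist.
        self._seats = threading.BoundedSemaphore(seats)

    def checkout(self) -> bool:
        """Try to take a seat; return False immediately if none is free."""
        return self._seats.acquire(blocking=False)

    def checkin(self) -> None:
        """Return a seat to the pool when the application exits."""
        self._seats.release()


# Three seats, as for the R2015 installation described above.
pool = FloatingLicensePool(3)
granted = [pool.checkout() for _ in range(4)]
print(granted)          # [True, True, True, False]
pool.checkin()          # one user closes MATLAB
print(pool.checkout())  # True
```

The fourth concurrent user is refused until someone checks a seat back in, which is exactly the behaviour users see when all floating licenses are in use.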

Remote execution of MATLAB on application server from Windows desktop

Centralized Computing Server Setup:

The centralized computing server setup consists of more than ten computing servers used for scientific computing and engineering applications. Centralized storage, configured on redundant servers for improved availability, is available to users. User authentication and authorization are handled through OpenLDAP (Lightweight Directory Access Protocol). Each user's home directory is available via Network File System (NFS) on all computing servers.

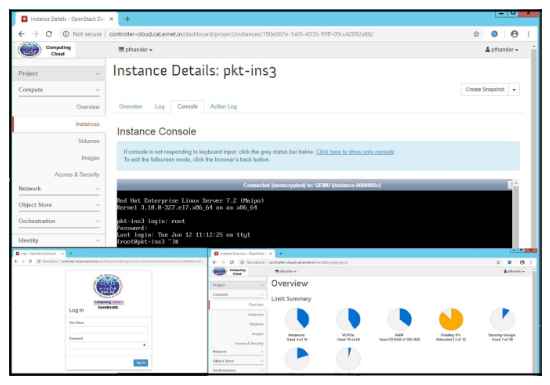

Infrastructure As A Service based Computing cloud setup

An IaaS (Infrastructure as a Service) based computing cloud has been commissioned on RRCATNet to meet users' short-term computational requirements. It enables scientists and engineers to quickly set up a computing environment matching their requirements using only a web browser on their desktop machine.

RRCAT Computing Cloud portal

Compilers, Scientific Libraries and Tools

Following compilers, scientific libraries and tools are made available to the computing users:

- CERNLIB (the CERN Program Library) is a collection of routines ranging from specialized data analysis for high energy physics to general-purpose numerical analysis.

- The Dalton program is designed to allow convenient, automated determination of a large number of molecular properties based on an HF, DFT, MP2, coupled cluster, or MCSCF reference wave function.

- Geant4 is a toolkit for the simulation of the passage of particles through matter. Its areas of application include high energy, nuclear and accelerator physics, as well as studies in medical and space science.

- Grace is a 2D plotting tool for the X Window System and Motif.

- Intel Fortran and C compilers have been installed on the latest computing server.

- Intel Parallel Studio XE 2017 Cluster Edition is a comprehensive suite of software development tools that simplifies the design, development, debugging, and tuning of parallel code while boosting application performance.

- Mathematica is a general computing environment, organizing many algorithmic, visualization, and user interface capabilities within a document-like user interface paradigm. It is a fully integrated environment for technical and scientific computing.

- Intel Math Kernel Library (MKL) has been installed for scientific users and is configured in the 64-bit computing environment of all clusters and computing servers.

- mpi4py (MPI for Python) provides bindings of the Message Passing Interface (MPI) standard for the Python programming language, allowing Python programs to exploit multiple processors.

- NAG Fortran Library is installed on Intel-based servers running Linux.

- NAG C Library is a comprehensive collection of functions for the solution of numerical and statistical problems.

- Radia is an add-on tool for Mathematica dedicated to 3D magnetostatics computation. It is optimized for the design of undulators and wigglers made with permanent magnets, coils and linear/nonlinear soft magnetic materials.

- VisIt is an open source interactive parallel visualization and graphical analysis tool for viewing scientific data. It can be used to visualize scalar and vector fields defined on 2D and 3D structured and unstructured meshes. VisIt was designed to handle very large data set sizes in the terascale range and yet can also handle small data sets in the kilobyte range.
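A common pattern in MPI programs, including those written with mpi4py, is to give each process (rank) a contiguous block of the work. With mpi4py the rank and the number of processes would come from `MPI.COMM_WORLD.Get_rank()` and `Get_size()`. The helper below is plain Python so it can be read and tested without an MPI installation; its name is hypothetical.

```python
def block_range(n_items: int, rank: int, size: int) -> range:
    """Indices owned by `rank` when n_items are split over `size` ranks.

    The first n_items % size ranks receive one extra item, so block sizes
    differ by at most one.
    """
    if not 0 <= rank < size:
        raise ValueError("rank must satisfy 0 <= rank < size")
    base, extra = divmod(n_items, size)
    start = rank * base + min(rank, extra)
    stop = start + base + (1 if rank < extra else 0)
    return range(start, stop)


# Ten items over four ranks: blocks of 3, 3, 2 and 2.
for r in range(4):
    print(r, list(block_range(10, r, 4)))
```

Inside an mpi4py script each rank would typically call `block_range(n, comm.Get_rank(), comm.Get_size())`, process its own indices, and combine partial results with `comm.reduce`.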

Parallel and sequential scientific computing applications on clusters and servers

As per users' requirements, various parallel and sequential application packages have been ported to the clusters and computing servers:

Parallel application software packages on RRCAT Clusters:

| Software Name | Details |
|---|---|
| ADF | ADF (Amsterdam Density Functional) is a Fortran program for calculations on atoms and molecules (in gas phase or solution). |
| COMSOL | It is a general-purpose software platform, based on advanced numerical methods, for modeling and simulating physics-based problems. It provides a simulation platform with dedicated physics interfaces and tools for electrical, mechanical, fluid flow and chemical applications. |
| CPMD | CPMD (Car-Parrinello Molecular Dynamics) is an ab initio electronic structure and molecular dynamics program. It is a parallelized plane wave / pseudopotential implementation of Density Functional Theory, particularly designed for ab initio molecular dynamics. |
| CRYSTAL | It is a general-purpose program for the study of crystalline solids. The CRYSTAL program computes the electronic structure of periodic systems within Hartree-Fock, density functional or various hybrid approximations. The Bloch functions of the periodic systems are expanded as linear combinations of atom-centred Gaussian functions. |
| EPOCH | It is a plasma physics simulation code that uses the Particle-in-Cell (PIC) method. In this method, collections of physical particles are represented by a smaller number of pseudoparticles, and the fields generated by the motion of these pseudoparticles are calculated using a finite difference time domain technique on an underlying grid of fixed spatial resolution. Output in EPOCH is handled using the custom-designed SDF (Self Describing Format) file format. |
| GAMESS | GAMESS (General Atomic and Molecular Electronic Structure System) is a program for ab initio molecular quantum chemistry. GAMESS can compute SCF wavefunctions including RHF, ROHF, UHF, GVB and MCSCF. Correlation corrections to these SCF wavefunctions include Configuration Interaction, second-order perturbation theory and Coupled-Cluster approaches, as well as the Density Functional Theory approximation. |
| GAUSSIAN | It is the latest in the Gaussian series of programs. It provides state-of-the-art capabilities for electronic structure modeling. |
| GROMACS | It is a versatile package for molecular dynamics, i.e. simulating the Newtonian equations of motion for systems with hundreds to millions of particles. It is primarily designed for biochemical molecules such as proteins, lipids and nucleic acids that have many complicated bonded interactions. Because GROMACS is extremely fast at calculating the nonbonded interactions that usually dominate simulations, it is also used for non-biological systems such as polymers. |
| HPC Packs for ANSYS Fluent | ANSYS HPC Packs allow high-performance computing to be scaled to whatever level a simulation requires. A group of ANSYS HPC Packs can enable entry-level parallel processing for multiple simulations, or packs can be combined to offer highly scaled parallel processing for the most challenging projects. |
| Materials Studio | BIOVIA Pipeline Pilot Materials Studio is a software solution that allows Materials Studio's modeling capabilities to be accessed and used within scientific applications. |
| MBTRACK | MBTRACK (Multi-Bunch TRACKing) is a multi-particle tracking code that uses parallel computation. It combines all the aspects of a single-bunch tracking code that uses a numerically deduced broadband impedance with those of a multi-bunch tracking code that treats long-range inter-bunch forces such as resistive-wall (RW) fields, by properly evaluating multi-turn effects. |
| MedeA-VASP | MedeA-VASP includes a comprehensive graphical interface to set up, run and analyze VASP calculations. MedeA provides tools for automating more complex computational tasks such as automated convergence runs and spreadsheet-based combinatorial screening. VASP is an extensively tested, robust and proven program for calculations based on local and semi-local density functional theory. |
| NAMD | NAMD is a parallel, object-oriented molecular dynamics code designed for high-performance simulation of large biomolecular systems. NAMD scales to hundreds of processors on high-end parallel platforms and tens of processors on commodity clusters using switched fast Ethernet. NAMD is file-compatible with AMBER, CHARMM and X-PLOR. |
| ORBIT_MPI | It is a particle-in-cell tracking code that transports bunches of interacting particles through a series of nodes representing elements, effects or diagnostics that occur in the accelerator lattice. It is used for beam dynamics calculations in high-intensity rings. |
| Parallel elegant | Pelegant (Parallel Electron Generation and Tracking), the parallel version of elegant, has proved very beneficial to several computationally intensive accelerator research projects. Simulations with a very large number of particles are essential for studying the detailed performance of advanced accelerators. |
| Quantum ESPRESSO | It is an integrated suite of open-source computer codes for electronic-structure calculations and materials modeling at the nanoscale. It is based on density-functional theory, plane waves and pseudopotentials. |
| SIESTA | SIESTA (Spanish Initiative for Electronic Simulations with Thousands of Atoms) is both a method and its computer program implementation for performing electronic structure calculations and ab initio molecular dynamics simulations of molecules and solids. It uses self-consistent density functional theory (DFT) to calculate the electronic structure. In addition, it offers options to modify the nuclear variables, such as molecular dynamics simulations, optimization and phonon calculations. It uses a linear combination of atomic orbitals (LCAO) as basis set. There is a choice of a direct or an iterative solver for the eigenvalue problem; the iterative solver scales linearly with the number of atoms. |
| SPRKKR | It is an open-source application for first-principles calculation of the electronic structure using the KKR method, a variant of the Green's function method. It is based on density functional theory and is applicable to crystals and surfaces. Because the coherent potential approximation (CPA) is adopted, it can handle not only periodic systems but also disordered alloys. It can also handle spin-orbit interaction and non-collinear magnetism. |
| VORPAL | VORPAL (Versatile Object-oriented code for Relativistic Plasma Analysis with Laser) offers a unique combination of physical models to cover the entire range of plasma simulation problems. |
| WIEN2k | WIEN2k performs electronic structure calculations of solids using density functional theory (DFT). It is based on the full-potential (linearized) augmented plane-wave ((L)APW) + local orbitals (lo) method, one of the most accurate schemes for band structure calculations. |
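The particle-in-cell method described for EPOCH and ORBIT_MPI relies on transferring charge between particles and a fixed grid. The sketch below shows one such step, linear ("cloud-in-cell") charge deposition onto a periodic 1D grid. It is an illustrative simplification, not EPOCH's implementation, which also includes the field solve and particle push; the grid size and particle data are arbitrary.

```python
def deposit_cic(positions: list[float], charges: list[float],
                n_cells: int, dx: float) -> list[float]:
    """Deposit particle charge onto a periodic 1D grid with linear weights.

    Grid nodes sit at x = i * dx and the domain wraps at n_cells * dx.
    A particle between nodes i and i+1 gives a fraction (1 - w) of its
    charge to node i and w to node i+1, where w = x/dx - i.
    """
    rho = [0.0] * n_cells
    length = n_cells * dx
    for x, q in zip(positions, charges):
        s = (x % length) / dx       # position in units of the cell size
        i = int(s)                  # index of the node to the left
        w = s - i                   # fractional distance to the right node
        rho[i % n_cells] += q * (1.0 - w)
        rho[(i + 1) % n_cells] += q * w
    return [r / dx for r in rho]    # charge per unit length


rho = deposit_cic([0.5, 1.0, 3.75], [1.0, 1.0, 1.0], n_cells=4, dx=1.0)
print(rho)  # [1.25, 1.5, 0.0, 0.25]
```

Because each particle's two weights sum to one, total charge is conserved: `sum(rho) * dx` equals the sum of the particle charges, here 3.0.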

Sequential application software packages on computing servers:

| Software Name | Details |
|---|---|
| AutoDock | AutoDock is a suite of automated docking tools with a 3D GUI. It is designed to predict how small molecules, such as substrates or drug candidates, bind to a receptor of known 3D structure. |
| DDSCAT | It applies the “discrete dipole approximation” (DDA) to calculate scattering and absorption of electromagnetic waves by targets with arbitrary geometries and complex refractive index. The targets may be isolated entities (e.g., dust particles), but may also be 1-d or 2-d periodic arrays of “target unit cells”, which can be used to study absorption, scattering and electric fields around arrays of nanostructures. |
| Elegant | It is a general-purpose code for electron accelerator simulation that has a worldwide user base. Its parallel version, Pelegant, is also available on the clusters. |
| EGSnrc | EGSnrc (Electron Gamma Shower NRC) is a general-purpose software toolkit for building Monte Carlo simulations of coupled electron-photon transport, for particle energies ranging from 1 keV to 10 GeV. It also includes data processing tools to analyze beam characteristics in detail and generate radiation dose profiles. |
| E-SpiReS | It is aimed at interpreting the dynamical properties of molecules in fluids from electron spin resonance (ESR) measurements. The code implements an integrated computational approach (ICA) for calculating the molecular properties needed to obtain spectral lines. |
| Flair | It is an advanced, user-friendly interface for FLUKA that facilitates editing FLUKA input files, executing the code and visualizing the output files. It is written entirely in Python and Tkinter. Flair works directly with the FLUKA input file and can read and write all accepted FLUKA input formats. |
| FLUKA | It is a fully integrated particle physics Monte Carlo simulation package. It is a general-purpose tool for calculations of particle transport and interactions with matter, covering an extended range of applications from proton and electron accelerator shielding to target design, calorimetry, activation, dosimetry, detector design, Accelerator Driven Systems, cosmic rays, neutrino physics and radiotherapy. It is used in high energy experimental physics and engineering, shielding, detector and telescope design, cosmic ray studies, dosimetry, medical physics and radiobiology. |
| Physics-OPC | Physics-Optical Propagation Code (OPC) simulates the propagation of light through an optical cavity or beam line. It works together with the existing gain codes Genesis 1.3 and Medusa to simulate, for example, Free-Electron Laser (FEL) resonators. |
| QuTiP | QuTiP (Quantum Toolbox in Python) is a library for simulating the dynamics of open quantum systems. It depends on the NumPy, SciPy and Cython numerical packages, and graphical output is provided by Matplotlib. QuTiP aims to provide user-friendly and efficient numerical simulations of a wide variety of Hamiltonians, including those with arbitrary time dependence, commonly found in physics applications such as quantum optics, trapped ions, superconducting circuits and quantum nanomechanical resonators. |
| Warp | It is an extensively developed open-source particle-in-cell code designed to simulate charged particle beams with high space-charge intensity. Its bent-mesh capability allows the code to efficiently simulate space-charge effects in bent accelerator lattices (resolution can be placed where needed) associated with rings and beam transfer lines with dipole bends. |
| XCrySDen | XCrySDen is a crystalline and molecular structure visualization program aimed at displaying isosurfaces and contours, which can be superimposed on crystalline structures and interactively rotated and manipulated. |
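Monte Carlo transport codes such as EGSnrc and FLUKA follow individual particles by sampling random distances between interactions. The sketch below shows that most basic ingredient: it samples exponential free paths to estimate the fraction of particles crossing a slab without interacting, and compares the estimate with the analytic value exp(-μt). The attenuation coefficient and thickness are arbitrary illustrative values; real codes add scattering, secondary particles and energy dependence.

```python
import math
import random


def uncollided_fraction(mu: float, thickness: float, n: int,
                        seed: int = 12345) -> float:
    """Estimate the fraction of particles crossing a slab without interacting.

    The distance to the first interaction is exponential with mean free path
    1/mu and is sampled by inverse transform: s = -ln(1 - U) / mu, U in [0, 1).
    """
    rng = random.Random(seed)
    passed = 0
    for _ in range(n):
        s = -math.log(1.0 - rng.random()) / mu
        if s > thickness:
            passed += 1
    return passed / n


mu, t = 0.5, 2.0  # attenuation coefficient (1/cm) and thickness (cm); illustrative only
estimate = uncollided_fraction(mu, t, 200_000)
exact = math.exp(-mu * t)
print(f"Monte Carlo {estimate:.4f}  analytic {exact:.4f}")
```

With 200,000 histories the statistical uncertainty of the estimate is about 0.001, so the two numbers should agree to roughly the third decimal place.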